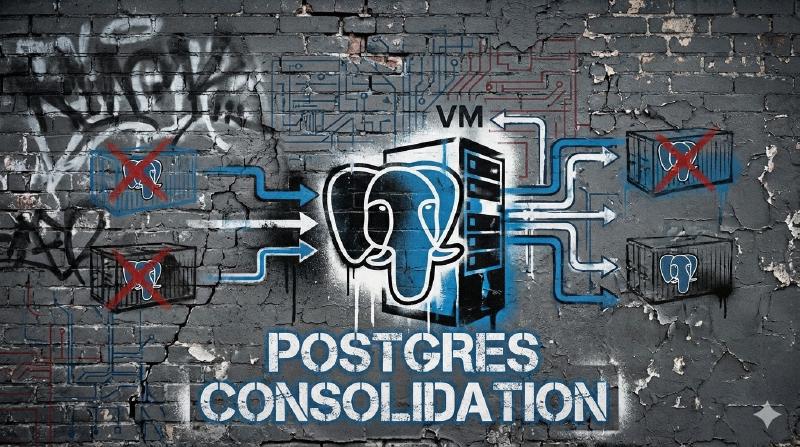

If you’re running more than a couple of Docker stacks, chances are you’re also running more PostgreSQL containers than you want to admit. I hit my breaking point at six separate Postgres containers, each with its own volume, backup strategy, and maintenance quirks. Every time I ran: docker compose pull && docker compose up -d it felt risky.

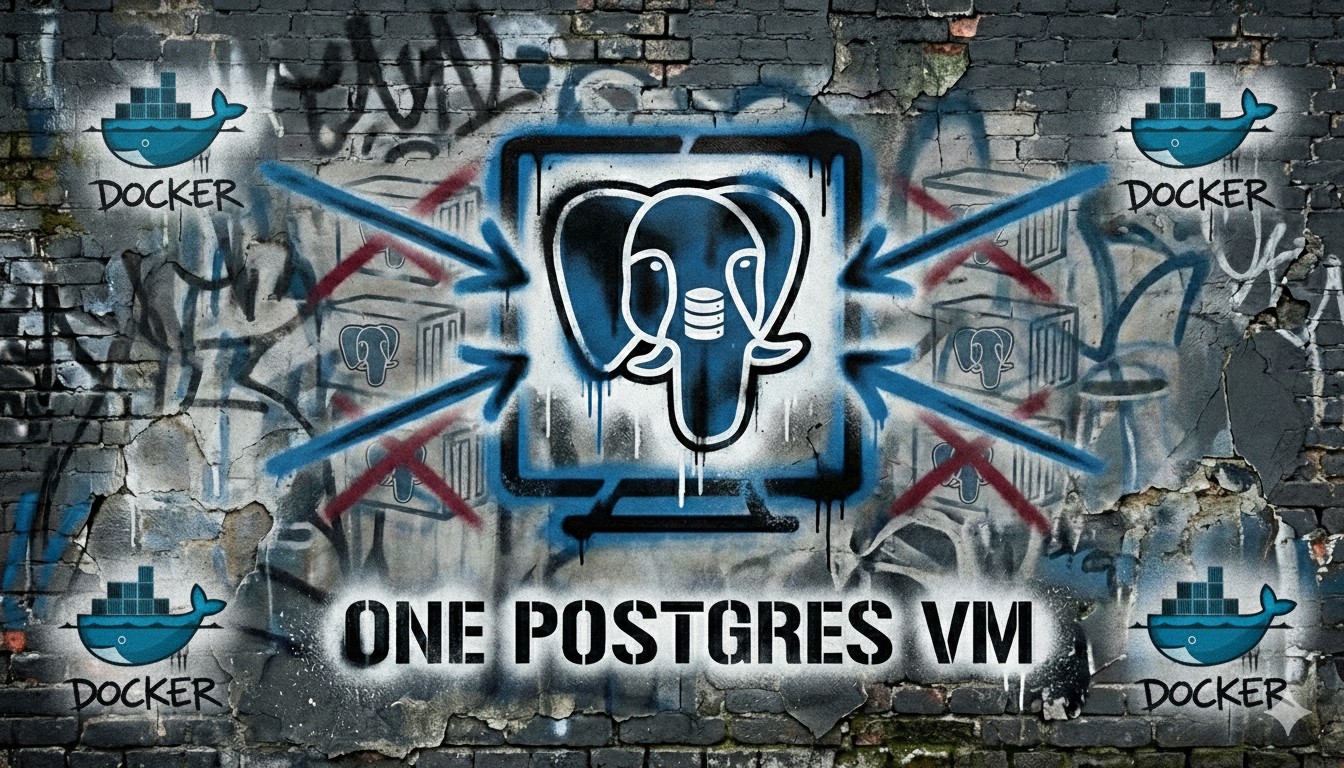

Consolidating PostgreSQL databases into a single dedicated server eliminates scattered backups, reduces resource bloat, and makes future migrations trivial, which is exactly what you want from a database. In this guide, you’ll learn how to install PostgreSQL on Debian, configure remote database access, and migrate Docker PostgreSQL containers to a centralized VM without breaking your apps or losing data.

This PostgreSQL migration guide moves you from multiple Docker-based instances to one dedicated server running in a Debian 13 VM on Proxmox. Expected downtime: 10-30 minutes per app during migration. Risk level: Medium, but I’ll also cover recovery strategies.

This is written for users who already understand Docker, basic Linux administration, and Postgres fundamentals, but want a clean, repeatable way to consolidate databases.

pg_dump, then update your Docker stacks to point at the new centralized server for simpler management and backups.

Why a Dedicated PostgreSQL VM Beats Multiple Containers

Before running any commands, let’s talk about why this is worth the effort.

When each Docker stack runs its own Postgres container, you’re dealing with:

- Duplicated memory and CPU usage across every instance

- Backups scattered across volumes (good luck finding the right one when you need it)

- Version upgrades happening at different times (or not at all)

- Monitoring and tuning that’s inconsistent at best

A single PostgreSQL server in a VM gives you:

- One place to back up and restore, your future self will thank you

- Centralized performance tuning that actually matters

- Cleaner Docker stacks with fewer moving parts

- Easier upgrades and security patching (do it once, not six times)

Here’s the thing: databases need to be treated as infrastructure, not app dependencies. Treating Postgres like shared infrastructure pays off quickly. I wish I’d done this years ago.

More details on why you should do this:

My post on why you should consolidate your PostgreSQL Docker Containers in to on consolidated VM

Prepare the Proxmox VM (Debian 13)

VM Sizing and Creation

In Proxmox, create a new VM with these baseline specs. These specs work well for 5 to 10 typical homelab apps:

- OS: Debian 13 ISO

- CPU: 2 to 4 cores

- RAM: 4 to 8 GB

- Disk: 20 to 50 GB SSD, VirtIO

- Network: Bridge to your homelab subnet

- Enable QEMU Guest Agent in VM options

PostgreSQL benefits more from RAM and fast storage than raw CPU. If you have the memory to spare, err on the side of more, you won’t regret it.

Install Debian 13 (Minimal)

During the Debian installer:

- Choose a minimal install, no desktop environment (you don’t need it)

- Assign a static IP, saves headaches later

- Install

openssh-server, so you can manage the VM remotely with SSH

After the first login, update the system and install the QEMU guest agent:

sudo apt update && sudo apt upgrade -y

sudo apt install -y qemu-guest-agent

sudo systemctl enable --now qemu-guest-agent

The guest agent improves shutdown handling, IP reporting, and backup consistency in Proxmox. It’s not required, but it’s one of those “install it now, appreciate it later” things.

This compact mini-workstation offers powerful CPU options and serious I/O, making it ideal for running a consolidated PostgreSQL server in a Proxmox VM with room for future growth.

Install PostgreSQL on Debian 13

Decision: Default Debian Repo or Official PostgreSQL Repo?

Alright, this is the first real fork in the road. Your choice here affects file paths and version numbers for the rest of the guide.

Option A: Debian default repository (RECOMMENDED)

- Pros: simpler, stable, fewer surprises

- Cons: version may lag behind upstream (Debian 13 uses PostgreSQL 17)

- Install paths:

/etc/postgresql/17/main/ - Service name:

postgresql

Install with:

sudo apt update

sudo apt install -y postgresql postgresql-contrib

Option B: Official PostgreSQL APT repository

- Pros: access to PostgreSQL 18 or newer features

- Cons: slightly more setup complexity, one more thing to maintain

- Install paths:

/etc/postgresql/18/main/ - Service name:

postgresql

If you want the latest features:

sudo apt install -y curl ca-certificates

sudo install -m 0755 -d /etc/apt/keyrings

curl -o /etc/apt/keyrings/pgdg.asc https://www.postgresql.org/media/keys/ACCC4CF8.asc

sudo chmod a+r /etc/apt/keyrings/pgdg.asc

echo "deb [signed-by=/etc/apt/keyrings/pgdg.asc] https://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" | sudo tee /etc/apt/sources.list.d/pgdg.list

sudo apt update

sudo apt install -y postgresql-18 postgresql-contrib-18

/etc/postgresql/17/main/ with /etc/postgresql/18/main/ in all config file paths. I know, I know, it’s annoying, but that’s the price you pay for bleeding edge.

Verify the Service

PostgreSQL starts automatically after installation. Let’s make sure it’s actually running:

sudo systemctl status postgresql

sudo systemctl enable postgresql

If you see “active (running)”, you’re golden. Now access the admin shell:

sudo -i -u postgres psql

You should see the postgres=# prompt. Check your version with SELECT version();

Output should look like this:

postgres=# SELECT version();

version

-------------------------------------------------------------------------------------------------------------------

PostgreSQL 17.7 (Debian 17.7-0+deb13u1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 14.2.0-19) 14.2.0, 64-bit

Then exit with \q.

Its multiple NVMe slots and dual 10G SFP+ networking make it a practical, quiet, and efficient choice for hosting a dedicated PostgreSQL server and handling VM workloads.

Configure PostgreSQL for Remote Access

By default, PostgreSQL only listens on localhost. That’s good for security, but useless for Docker containers on other hosts. Let’s fix that.

Enable Network Listening

Edit postgresql.conf (adjust path for your version):

sudo nano /etc/postgresql/17/main/postgresql.conf

Find and set:

listen_addresses = '*'

This allows PostgreSQL to listen on all interfaces. Don’t worry, access control is handled separately, so you’re not opening the floodgates here.

Configure Client Authentication

Edit pg_hba.conf:

sudo nano /etc/postgresql/17/main/pg_hba.conf

Add a rule for your Docker subnet(s) and your LAN. For example:

# LAN Subnet

host all all 172.27.0.0/24 scram-sha-256

# Docker 1 Subnet

host all all 172.17.0.0/16 scram-sha-256

# Docker 2 Subnet

host all all 172.18.0.0/16 scram-sha-256

Use scram-sha-256 authentication instead of md5 where possible. It’s more secure and the modern default. Your Docker clients will handle it fine.

Restart PostgreSQL:

sudo systemctl restart postgresql

Firewall the Database

Never expose PostgreSQL to the internet. Just don’t. Lock it down to your trusted subnets.

Using UFW:

sudo apt install -y ufw

sudo ufw allow from 172.17.0.0/16 to any port 5432 proto tcp

sudo ufw enable

Verify it is working:

sudo ufw status

Adjust the subnet to match your Docker hosts or Proxmox bridge. If you’re not sure what subnet to use, check your Docker network with docker network inspect bridge.

It should look like this when you are done:

Status: active

To Action From

-- ------ ----

5432/tcp ALLOW 172.27.0.0/24

22/tcp ALLOW Anywhere

5432/tcp ALLOW 172.18.0.0/16

5432/tcp ALLOW 172.17.0.0/16

Checkpoint:

From another host or container already running Postgres, test connectivity.

docker exec -it <container_name> /bin/bash

Run this to test if the firewall is allowing traffic on this subnet

psql -h <vm_ip> -U postgres

If it connects and asks for a password, you’re good.

Press ctrl-c to abort the connection and exit to leave the container.

If it fails, check logs with:

journalctl -u postgresql

Nine times out of ten, it’s either pg_hba.conf or the firewall. Double-check both.

Create Users and Databases for Docker Apps

Here’s where a lot of people mess up: they dump everything into a single database with a single superuser. Resist that urge.

Identify Existing Containers

On your Docker host(s):

docker ps | grep postgres

Make a list of containers, databases, and users. Seriously, write it down or put it in a text file. You’ll reference it constantly.

Create a dedicated role and database per application. It makes troubleshooting and permissions way simpler later.

sudo -i -u postgres psql

Example:

CREATE ROLE ExampleUser WITH LOGIN PASSWORD 'StrongPasswordHere';

CREATE DATABASE example_db OWNER ExampleUser;

Repeat this for each former Docker-based Postgres instance. Yeah, it’s a bit tedious, but you only do it once.

Test access:

psql -h localhost -U ExampleUser -d example_db

If you see the example_db=> prompt, you’re set. Exit with \q.

example and example_db) makes your life so much easier six months from now when you’re trying to remember which database belongs to what.

Migrate Docker PostgreSQL Databases to Dedicated Server

Alright, this is the heart of the PostgreSQL migration process. Take your time here, rushing this step is how you end up restoring from backups (You have backups right? RIGHT?).

Handle Extensions and Ownership Issues First

Before dumping, identify potential problems. This saves you from “why won’t it restore?” headaches later:

# Check for extensions

docker exec -it <container> psql -U <user> -d <db> -c "\dx"

# Check for custom roles

docker exec -it <container> psql -U <user> -c "\du"

Common issues you’ll hit:

- Extensions like

pg_trgmoruuid-osspmay need manual creation on the target serverCREATE EXTENSION IF NOT EXISTS "uuid-ossp"; - Role ownership conflicts between different container dumps (especially if you’re using

pg_dumpall)

Dump the Data

Decision point here:

- Small homelab DBs (under a few GB): dumping while containers are running is usually fine

- Larger or critical DBs: stop the app container first to guarantee consistency

For single database (recommended):

docker exec -it <container> pg_dump -h 127.0.0.1 -U postgres -d example_db --format=plain --no-owner --no-privileges > example_dump.sql

The --no-owner --no-privileges flags avoid role and ownership conflicts during restore. You’ll set ownership manually on the new server anyway.

For all databases and roles (advanced, usually overkill for homelabs):

docker exec -it <container> -h 127.0.0.1 pg_dumpall -U <user> > full_dump.sql

Watch the output. If you see errors about missing permissions or roles, that’s your cue to use --no-owner --no-privileges.

Transfer Dumps to the VM

Use scp or VSCode to download and upload to the new server:

scp db_dump.sql user@postgres-vm:/tmp/

If you’ve got multiple dumps, throw them all in /tmp/ on the VM. Just remember to clean them up later.

Restore into the New Server

On the PostgreSQL VM:

psql -h localhost -U ExampleUser -d example_db -f /tmp/db_dump.sql

Repeat for each application. Yeah, it’s repetitive. Put on some music.

If you hit extension errors like “extension uuid-ossp does not exist”, install them manually:

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

Most common extensions you’ll need: uuid-ossp, pg_trgm, hstore. Install them as needed.

Great choice for a PostgreSQL database because its very high IOPS and low latency keep reads and writes snappy under load, while the sustained throughput helps with WAL logging, indexes, and vacuum/maintenance jobs without the whole system feeling sluggish.

Update Docker Stacks to Use the Central Database

This step removes PostgreSQL from your Docker stacks entirely. It’s weirdly satisfying.

In your docker-compose.yml:

- Comment out the

postgresservice (Remove once you know it works) - Comment out volume definitions tied to Postgres (Remove once you know it works)

- Update environment variables to point at the new server

Example:

environment:

POSTGRES_HOST: <postgres_VM_IP>

POSTGRES_DB: example_db

POSTGRES_USER: ExampleUser

POSTGRES_PASSWORD: StrongPasswordHere

Recreate the stack:

docker compose down

docker compose up -d

Your application should now connect to the centralized PostgreSQL server. Check the logs to make sure it’s connecting:

docker compose logs -f

If you see database connection errors, double-check your environment variables. Typos in the hostname or database name trip up 80% of people here.

Once you do this for one stack, the rest should be faster.

PostgreSQL Performance Tuning for Homelabs

Out of the box PostgreSQL settings are conservative. Here’s safe starting points for a homelab:

Edit postgresql.conf:

shared_buffers = 1GB

work_mem = 16MB

max_connections = 100

Why these settings matter:

shared_buffers: PostgreSQL’s main cache. Rule of thumb is 25% of system RAM, but too much can actually hurt performance on smaller systems. For a 4GB VM, 1GB is the sweet spot.work_mem: Per-operation memory for sorts and joins. This multiplies by concurrent connections, so be conservative. 16MB is safe for most homelabs.max_connections: Many apps hold idle connections. 100 is safe for most homelabs, you’re probably not hitting 50 concurrent connections anyway.

work_mem = 64MB and 100 connections could theoretically use 6.4GB of RAM during heavy queries. This is how you OUT OF MEMORY (OOM) kill your databases.

Restart PostgreSQL after changes:

sudo systemctl restart postgresql

These settings work well for read-heavy media workloads. If you’re running something write-heavy, you’ll want to tune further, but this is a good baseline.

Troubleshooting PostgreSQL Migration Issues

Connection Refused from Docker Containers

This is the most common issue. Here’s the checklist:

- Confirm PostgreSQL is listening:

ss -lntp | grep 5432 - Verify

listen_addresses = '*'is set and not commented out - Check firewall rules with

sudo ufw status - Double-check

pg_hba.confsubnet and authentication method

If you’re still stuck, check the logs:

journalctl -u postgresql -n 50

Look for “connection refused” or “no pg_hba.conf entry” messages. They’ll tell you exactly what’s wrong.

pg_dump Fails with “Role Does Not Exist”

This usually means roles from the old container don’t exist yet on the new server.

Fix by either:

- Creating the roles first with

CREATE ROLE, or - Using

--no-owner --no-privilegesduring dump and restore (recommended)

Using --no-owner --no-privileges is cleaner. You’re not trying to preserve complex permission structures, you just want the data.

Authentication Errors After Migration

Frustrating, I know. Check these:

- Ensure passwords match what’s in Docker environment variables (no trailing spaces)

- Confirm

scram-sha-256is supported by your client library (it should be) - Check for copy-paste errors in passwords, seriously, this happens more than you’d think

If you’re still stuck, temporarily switch to md5 in pg_hba.conf to isolate the issue. If that works, it’s a scram-sha-256 compatibility problem.

Performance Worse Than Before

Wait, what? Yeah, this can happen. Here’s why:

- Your containers may have been memory-starved without you realizing it, so they were “fast” because they weren’t doing much

- The new server is actually doing proper caching and query planning

- You need to increase VM RAM or revisit

shared_buffersandwork_mem

Run EXPLAIN ANALYZE on slow queries to see what’s happening. Usually it’s just a matter of giving PostgreSQL more memory to work with.

Extension Errors During Restore

If you see “extension does not exist” errors, install them manually:

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

CREATE EXTENSION IF NOT EXISTS "pg_trgm";

Most homelab apps use a handful of common extensions. Install them once and you’re done.

FAQs

➤ How do I connect to PostgreSQL from Docker containers on a different host?

pg_hba.conf, and ensure your firewall permits port 5432 only from trusted subnets. Never open it to the internet, really, don’t do it.➤ What's the safest way to migrate a large Docker PostgreSQL database without downtime?

pg_dump, restore, then restart the app pointing at the new server. Trying to do zero-downtime migrations in a homelab is usually more trouble than it’s worth.➤ Why can't I access PostgreSQL after install?

listen_addresses and pg_hba.conf, then restart the service. This trips up everyone the first time.➤ Should I use Debian repos or the official PostgreSQL repo?

➤ How much RAM and CPU should I allocate to a PostgreSQL VM?

➤ Can I migrate Docker PostgreSQL databases while containers are running?

➤ What's the QEMU guest agent used for?

Conclusion

Consolidating multiple Docker-based PostgreSQL instances into a single dedicated server is one of those changes that feels intimidating but pays off immediately. You reduce clutter, simplify backups, and gain real control over your data layer.

The key takeaways:

- Use a dedicated Debian 13 VM for PostgreSQL, treat it as one of your most important VMs

- Keep one user and database per application (makes troubleshooting way easier)

- Migrate with

pg_dump --no-owner --no-privileges, not filesystem copies - Lock down network access to trusted subnets only

- Handle extensions and roles proactively during migration

If you’re tired of babysitting half a dozen Postgres containers like I was, this approach will make your homelab cleaner and more predictable.