6 AM. Power came back after an outage. My Proxmox host boots up. The VM that runs my NAS starts… slowly. My Jellyfin container mounts /mnt/media before the NFS share is ready. The mount succeeds but points to an empty directory. My library: “No items found.”

I fixed it in 10 minutes. But I’d already done this dance multiple times every week for eight months and I hated having to manually mount the drives everytime the server boots.

The problem wasn’t the hardware. It wasn’t the software. It was the architecture: I was running my storage as a VM on my compute host. Every reboot was a roll of the dice for timing and mount order.

So, I pulled the HBA and drives out of my Proxmox host and moved them to a dedicated bare metal Debian box running MergerFS with 93TB of drives and SMB and NFS shares.

That was six months ago. I’ve rebooted my compute host 30+ times since then. Zero mount failures, race conditions, or extended debugging sessions late at night. Just my media being served up when I need it.

If your media library matters, give it its own box. I go over how I built mine and why, and why you should dedicate a box to it as well.

If you are trying to figure out the best NAS setup for a home media server, here is the short version: stop running your storage in a VM and give it its own bare metal box that exists only to keep your files safe and available when you want them.

Quick picks: best NAS options for media servers (2025)

If you came here looking for the “just tell me what home NAS to buy” version, here is the short list.

1. DIY bare metal NAS for homelab nerds

You want control, flexibility, and better hardware for the money.

- OS: Debian or another solid Linux base, with MergerFS for pooling and NFS or SMB exports

- Use case: Jellyfin or Plex library, backups, maybe a bit of general file storage

- Why it is the best fit:

- You are not locked into a vendor GUI

- You can pick quiet, low power parts instead of whatever the NAS vendor felt like shipping

- Easy to grow storage with bigger drives later rather than buying a whole new box

This is the setup I use and for me, it is the best NAS for a home media server. The Proxmox host talks to it over the network, and the NAS does one job and does it well.

2. Synology NAS for people who want easy mode

You want something that just works, and you do not want to learn MergerFS.

- Example: 4 to 8 bay Synology DiskStation for media and backup

- Use case: You want a simple web UI, snapshots, built in apps, and clean integration with Windows and macOS

- Why it is a good fit:

- Synology handles RAID, drive health alerts, and shares for you

- Great if you want to spend more money on hardware and less time learning Linux

- Perfect for “I run Jellyfin on another box, this thing just holds the files”

You give up some flexibility and pay a premium compared to DIY, but you get a nice, polished experience.

Is a 4-bay NAS running DSM with an easy setup, dual 2.5 GbE, M.2 NVMe slots for cache, ECC-capable RAM up to 32 GB, expansion to 9 bays, and roughly 500+ MB/s for multi-user streaming. Pick it over a DIY build if you want simple and reliable, since DSM gives you polished wizards, built-in backup and media apps, and hardware that just works.

3. Used server or small business box if you like deals

You are comfortable with louder gear and you want lots of bays for cheap.

- Examples: Used Dell, HP, or Lenovo small servers or business desktops with extra SATA added

- Use case: Big media libraries, lots of drives, budget conscious builds

- Why it can make sense:

- Older enterprise gear is cheap and still very capable

- Easy to stuff with drives and treat it as a dedicated storage tank

- Run Debian or your favorite NAS OS and treat it like the DIY option above

You just have to watch power draw and noise. Great for a basement rack, not great for a studio apartment.

No matter which path you pick, the rule is the same:

Your media server should talk to a dedicated NAS box over the network, not share a boot drive with Proxmox and six LXCs that all panic if a mount is late.

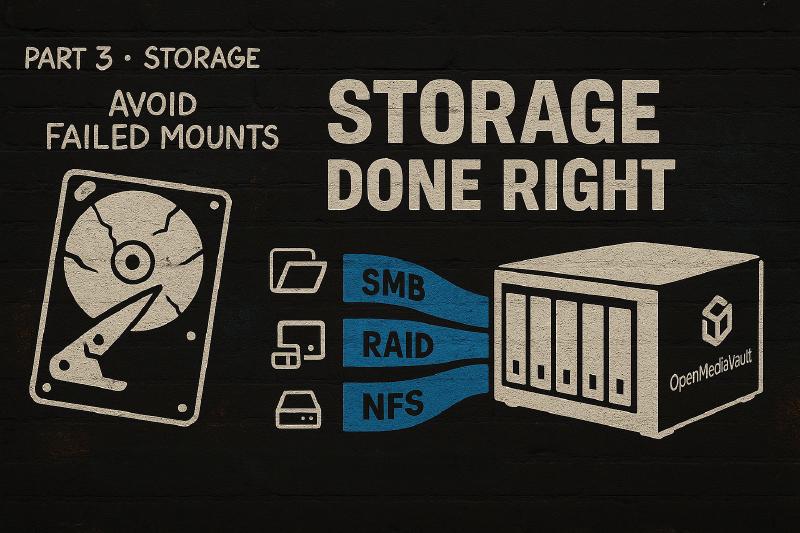

Storage In VMs: Just Because You “CAN”, Doesn’t Mean You Should

Let’s get this out of the way: running your NAS in a Proxmox VM is a bad idea. Running it in an LXC is an even worse idea.

Reddit will tell you: “It’s more efficient!” or “One box does everything, and it is amazing!”

I’m here to tell you they are wrong and what you’ve actually built is a house of cards where storage, the foundation of your entire media stack depends on:

- A hypervisor booting correctly

- VM startup order being predictable

- Mounts being added and mounted to the Proxmox host correctly (My biggest issue)

- Network initialization happening before mount attempts

- No Proxmox updates changing any of the above

You’re not being efficient. You’re optimizing for a $200 hardware savings while trading it for hours of maintenance, failed mounts, and streams that don’t work when you want them to.

I know because I did this for six months. I spent roughly 3 hours every week debugging mount failures, tweaking systemd scripts, adjusting delays, and reading Proxmox forums at midnight. That’s 78 hours over six months (Almost two full work weeks fighting an architecture that was fundamentally wrong).

The worst part? Each failure was only a 10-15 minute fix. But they happened constantly. Reboot the host? Roll the dice. Proxmox update? Hope your mounts still work. Add a new container? Maybe it boots before the NAS is ready, maybe it doesn’t.

/etc/fstab and can’t mount the shares because the VM hasn’t even started. Mounting shares later isn’t as straightforward as it sounds. Any workarounds you apply afterward just treat the symptoms without addressing the root cause. I exhausted every solution I could find across Google, Reddit, and the Proxmox forums. Some provided marginal improvements, but none fully resolved the problem.

The Real Cost

Let me break down what this “efficient” setup actually cost me:

- 78+ hours of debugging over six months

- Multiple service outages where Jellyfin showed “Library Empty” because mounts succeeded but pointed to empty directories

- Constant user complaints about streams dying or libraries disappearing

- Mental overhead of “will this reboot break everything?”

What did I gain by running storage in a VM? Absolutely nothing. I saved a small amount of money on power, not enough to make up for my time. That’s it.

The Time My Storage Failed Me (And Why I Finally Fixed It)

Here is what finally convinced me to do this right.

The Reboot Lottery

Power outage, kernel update, Proxmox update, stuck containers can all cause the need to reboot the Proxmox host. When the host is rebooted… Proxmox comes back up. NAS VM starts… but something’s off. The VM boots, the drives mount inside the VM, but Proxmox’s automount fails to see the NFS share. Jellyfin LXC mounts /mnt/media to an empty local directory. Users see “Library Empty.”

I SSH in, manually remount, restart containers. Fixed in 10 minutes.

But this wasn’t a one-time thing. This happened a few times a week with different variations:

- Sometimes the VM booted too slowly

- Sometimes Proxmox tried to mount before the NFS server was actually serving

- Sometimes it worked perfectly

- Sometimes my custom delay scripts helped, sometimes they didn’t

I tried:

- Systemd mount delays (worked sometimes)

- Custom scripts that pinged the NAS before mounting (race conditions remained)

- Automount with longer timeouts (helped but didn’t fix it)

- Tweaking VM boot order and priority (marginal improvement)

Nothing worked reliably. Because the problem wasn’t the configuration, it was the architecture.

That’s when I realized: this is insane. I’m spending hours maintaining mount orchestration on a system that should “just work.” The storage shouldn’t be a VM. It shouldn’t depend on a hypervisor. It should boot first and serve files. Period.

The VM Race Condition From Hell (What’s Actually Happening)

Here’s what was happening in my Proxmox host every reboot:

- Proxmox boots

- VMs start launching - based on boot order/priority settings

- My NAS VM begins booting - this takes time: OS boot, network init, NFS server start

- LXC containers start

- Containers try to mount

/mnt/mediavia NFS from the Proxmox host (Bind mounts) - Three possible outcomes:

- ✅ NAS is ready → mount succeeds → everything works

- ❌ NAS isn’t ready → mount fails → services break

- ⚠️ Mount succeeds but NFS isn’t serving yet → mount points to empty directory → “Library Empty”

That third one is the nastiest because everything LOOKS like it worked. The mount command succeeded. The directory exists. But there’s no data because the NFS server inside the VM hasn’t finished starting yet.

Why This Is Fundamentally Broken

The hypervisor doesn’t know or care about your application dependencies. Proxmox sees:

- NAS VM (priority: normal)

- App containers (priority: normal)

- Network is up

- Start everything

It doesn’t understand that your Jellyfin container NEEDS the NAS to be fully operational before it can function. You can try to encode this with systemd dependencies, boot delays, ping scripts, and health checks but, you’re still fighting the architecture.

The solution isn’t better orchestration. It’s removing the orchestration entirely.

LXCs Are Even Worse (Seriously, Don’t)

I never tried running my NAS in an LXC. But I’ve seen people attempt it on Reddit and Proxmox forums, and it’s a nightmare every single time.

Why people try it:

- “LXCs are lighter than VMs!”

- “I don’t need full VM overhead for a file server!”

- “I can bind-mount drives directly!”

Why it fails spectacularly:

No Real Hardware Access

LXCs share the host kernel. You can pass through devices, but you’re not getting true hardware access like bare metal. Running ZFS in an LXC? You’re trusting the container layer to not screw up your file systems. Running SMART monitoring? Good luck with device passthrough being consistent.

The Same Mount Race Conditions, But Worse

The LXC has to start, the storage daemon has to initialize, the NFS/SMB server has to start, and THEN other containers can mount. You’ve just recreated the VM problem with less isolation and more ways for it to break.

What Actually Happens

Search r/Proxmox for “LXC NFS” or “LXC storage” and you’ll find a number of posts like this:

- “My LXC can’t see the drives after reboot”

- “Permissions are broken after Proxmox update”

- “SMART data isn’t available in the container”

The pattern is always the same: someone tries to be clever, runs storage in an LXC to “save resources,” and ends up with a fragile, unreliable mess.

Don’t do it.

Why Dedicated Bare Metal Is The Only Sane Option

When I moved my storage to bare metal Debian, here’s what changed:

Predictable Boot Order (Finally)

It boots, drives spin up, XFS filesystems mount, MergerFS pools them, NFS server starts. All of this happens BEFORE my Proxmox host even starts booting.

By the time Proxmox comes online and containers try to mount /mnt/media, the NAS has already been serving files and waiting. Zero race conditions, timing dependencies, or failed mounts.

No More Orchestration Hell

I deleted:

- Custom systemd mount units with delays

- Ping-before-mount scripts

- VM boot order priorities

- Health check containers

- All the “clever” solutions I built to work around a broken architecture

The NAS boots. It serves files. Proxmox mounts them. That’s it. No orchestration needed.

Fault Isolation

When I need to rebuild my Proxmox host or test new versions, my storage stays online. It keeps serving files to the containers that are still running. When I need to add drives or run maintenance on the NAS, I take it offline briefly but, my compute layer isn’t impacted. I do however, reboot the compute node after NAS maintenance just to ensure everything is working.

Before, everything was tangled together. Proxmox down = storage down. Storage issues = compute issues. It was all one fragile system. I made it even worse because at the time my router was also in a VM on the same Proxmox host. So, every reboot also took down my internet too. Don’t be like me.

Zero Maintenance

In eight months since the migration:

- Proxmox reboots: 30+

- NAS mount failures: 0

- Hours spent debugging storage: 0

- Streams interrupted by storage issues: 0

The NAS just works. I literally forget it exists until I need to add more drives.

What I Gave Up

Nothing. Absolutely nothing.

I added one more box to my rack (an old desktop PC I already owned). That’s it. No performance penalty. No feature loss. No additional complexity. Actually, LESS complexity because I removed all the mount orchestration.

Adding a low power CPU to run the NAS had a minimal impact to the power bill (About 40-60W).

Right now one of the best price per GB you can find. Also has a 2-year warranty.

“But My NAS VM Works Fine” (For Now)

If you’re reading this thinking “my NAS VM is stable, this doesn’t apply to me,” let me ask:

- Have you rebooted your Proxmox host this month? Did everything come back up cleanly?

- Have you updated Proxmox recently? Did your mounts still work after?

- Do you have multiple containers depending on storage? Do they all mount reliably?

- Have you tested what happens during a power failure and cold boot?

- Can you reboot your compute layer without taking storage offline?

If you answered “yes” to all of these, congratulations! You’ve either gotten extremely lucky or you’ve spent dozens of hours building complex orchestration to paper over the architectural problems.

But here’s the thing: it works until it doesn’t.

You’re not running a reliable system. You’re running a system that hasn’t failed YET. And when it does fail during a family movie night, you’ll wish you’d built it right from the start.

The Real Question

What are you actually gaining by running storage in a VM?

- Saving one hardware box? (An old desktop PC costs $50-200 used)

- “Efficiency”? (You’re trading hardware efficiency for operational chaos)

- Easier management? (Is debugging mount failures “easier” than running a separate box you never touch?)

Be honest: you’re not optimizing for reliability or simplicity. You’re optimizing for… what, exactly?

The Architecture Comparison

Let me show you what I ran vs what I run now.

WRONG: What I Built First

Proxmox Host (one physical box)

├── NAS VM (OMV)

│ ├── Boots at ??? (depends on VM priority)

│ ├── Needs: OS boot → network init → storage mount → NFS/SMB start

│ └── Serves: NFS/SMB shares back to Proxmox host

│

├── Jellyfin LXC

│ ├── Boots at ??? (fast, because LXC)

│ ├── Tries to mount: /mnt/media (from Proxmox host bind mount)

│ └── Result: ⚠️ Maybe works, maybe empty directory, maybe fails

│

├── Sonarr LXC

│ ├── Boots at ???

│ ├── Tries to mount: /mnt/media (from Proxmox host bind mount)

│ └── Result: ⚠️ Maybe works, maybe empty directory, maybe fails

Problems:

- Boot order is non-deterministic (depends on VM/LXC startup speed)

- NAS VM has multiple initialization steps before it’s ready to serve

- Containers mount before checking if NFS is actually serving

- Reboots are a lottery

- Proxmox updates can change everything (rare, but still a thing)

RIGHT: What I Run Now

NAS Box (dedicated bare metal Debian)

├── Hardware: Intel G3220, 16GB RAM, LSI HBA, 10GbE NIC

├── Software: Debian Trixie, XFS per drive, MergerFS pooling

├── Boot: Second. After the dedicated Router

└── Serves: NFS shares to network (always available)

↓ (2.5GbE Network)

Proxmox Host (separate physical box)

├── Boot: Thrid (after NAS is already serving)

├── Mounts: /mnt/media via NFS from NAS box (always succeeds)

│

├── Jellyfin LXC → bind mounts /mnt/media → ✅ always works

└── Sonarr LXC → bind mounts /mnt/media → ✅ always works

Benefits:

- Boot order is deterministic (NAS second, always)

- No dependencies between storage and compute layers

- Reboots are predictable

- Proxmox updates don’t affect storage

- Fault isolation (one system failing doesn’t take down the other)

Network Details

Connected via SODOLA 8-Port 2.5Gb managed switch:

- NAS: 10GbE NIC (downlinks at 2.5Gb to switch)

- Proxmox: 2.5GbE NIC

- Throughput: ~280 MB/s sustained (vs ~110 MB/s on 1GbE)

- Multiple simultaneous 4K streams + NZB downloads + backups = no congestion

How I Migrated

Here’s exactly how I moved from “NAS VM on Proxmox” to “NAS on bare metal.”

What I Started With

- Proxmox host with LSI HBA in IT mode

- 8x drives passed through to OMV VM

- Containers mounting via NFS from Proxmox host (bind mounts)

- Constant race condition issues

What I Did

1. Built the new NAS box first

- Old desktop: Intel G3220, Gigabyte GA-Z87X-D3H motherboard, 16GB DDR3

- Installed Debian Trixie

- Added 10GbE NIC (Intel X520-DA1, $30 used on eBay)

- Configured it on the network with a static IP

2. Tested NFS serving (before moving drives)

- Set up NFS exports on the new Debian box

- Verified Proxmox could mount from it

- Made sure permissions and paths matched my existing setup

3. Scheduled downtime (Friday night)

- Shut down all containers/VMs on Proxmox

- Shut down the NAS VM

- Shut down the Proxmox host

4. Moved the hardware

- Pulled the LSI HBA from Proxmox host

- Moved all 8 drives

- Installed HBA and drives in the new NAS box

- Connected power, network

5. Brought up storage

- Booted the NAS box

- Drives appeared as

/dev/sdathrough/dev/sdh - Mounted each drive:

mount /dev/sdX /mnt/diskX - Configured MergerFS to pool them:

/mnt/disk* /mnt/media - Set up NFS exports pointing to

/mnt/media - Updated

/etc/fstabso everything mounts on boot

6. Brought Proxmox back online

- Booted Proxmox

- Updated

/etc/fstabto point to the new NAS IP instead of VM mount - Verified NFS mounts succeeded

- Started containers one by one

- Checked Jellyfin library, verified all media was accessible

Total downtime: ~2 hours (most of it was physical drive transplant and cable management)

LSI 9300-8i HBA Controller

LSI SAS3008 9300-8i HBA Controller is a high-performance disk controller suitable for media server data storage needs. With a transfer rate of up to 12Gbps, this unit provides reliable and fast data storage solutions.

Price: $30 - $40

Contains affiliate links. I may earn a commission at no cost to you.

What I Learned

- Test the new setup before you commit. I verified NFS serving worked before I moved drives.

- Expect the first boot to take longer. XFS file system checks took a few minutes per drive.

- Plan for worst case. I kept the Proxmox host ready to take the HBA back if something went catastrophically wrong (it didn’t).

What Your NAS Actually Needs To Do

Redundancy

At minimum: ability to survive drive failure without data loss.

I’ll be honest: I ran without parity for two years. Why? Because 95% of my data was movies and TV shows I could redownload. Then I started adding family videos and photos - stuff I can’t get back. That’s when I decided to add SnapRAID for parity (I’m adding this next month if HDD prices come down).

Use any scheme you’re comfortable with: parity, mirrors, or just redundancy for critical bits. Just know what you’re protecting and what you’re willing to lose.

Protocols That Work

- NFS for your virtualization or Linux services

- SMB/CIFS for Windows/macOS clients

- SFTP for remote access or backup jobs

Your NAS must seamlessly integrate with your compute and network layers. My setup serves NFS to Proxmox hosts and SMB to Windows machines for manual file management. It just works.

Smart Mounting Strategy

This is where most people screw up:

- Stick to consistent paths:

/mnt/media/movies,/mnt/media/showsnot/media1,/media2,/random-drive-name - Enable automount so on reboot everything comes up in order (systemd handles this well on Debian)

- Keep temp, transcode, and download directories separate from your main pool to avoid runaway writes destroying your media drives

- Use SMART monitoring and actually test restore workflows. You’ll thank yourself later

I run smartctl -a /dev/sdX monthly on each drive. One drive showed reallocated sectors climbing. Replaced it before it died.

Software Choices—What I’ve Used and What Actually Works

Here’s what I’ve tested and what I actually run.

| NAS OS | Strengths | Weaknesses | My Take |

|---|---|---|---|

| TrueNAS | Strong ZFS support, snapshot capabilities, enterprise features | Needs more RAM/hardware, steeper learning curve, overkill for media | Great if you need ZFS. I don’t. |

| Unraid | Flexible drives, less hardware-intensive, nice GUI | License cost ($59-$129), lower performance on some tasks | Popular for good reason, but I’m cheap and wanted full control |

| OpenMediaVault | Lightweight, easy to set up, web GUI | May lack advanced features, felt restrictive to me | Where I started in a VM, outgrew it fast |

| Debian + MergerFS | Lightweight, total control, exactly what you need | No GUI (you use SSH and config files), learning curve | This is what I run. Took a weekend to learn, now it’s bulletproof. |

I originally tried OpenMediaVault in a VM. It worked, but every time I wanted to do something slightly custom, I fought with the GUI or the update system. Moved to straight Debian with MergerFS and NFS/SMB servers. No GUI, just config files and systemd. Steeper learning curve? Yes. But now I understand exactly how everything works and nothing is hidden behind abstraction layers. When something breaks (rare), I know exactly where to look.

ZFS vs MergerFS - What’s Best For a Media Server?

Let’s cut the fluff: you don’t always need ZFS for a media-server-only setup.

ZFS: Enterprise Grade Redundancy

RAID-Z/RAID-Z2, checksums, snapshots, send/receive. Requires serious RAM (1GB per TB is the common recommendation) and prefers ECC memory.

I almost started with ZFS because that’s what the internet said to use. Researched ECC RAM, planned my vdev layout, read the entire FreeBSD handbook section on ZFS. Felt very enterprise. It also had an enterprise price tag.

Then I realized: if a drive dies, I can just re-download everything in a weekend. Why am I treating Bob’s Burgers S04 like a production database?

When ZFS is worth it:

- You’re running VMs, databases, things that can’t be redownloaded

- You can afford to buy 6 or more identical drives in one purchase (I couldn’t justify this).

- You have irreplaceable data (family photos, business files)

- You want snapshots and send/receive for backups

For me? 80% of my data was re-downloadable movies and shows. ZFS was overkill. I make backups of the irreplaceable files and keep them in three locations (Local, External HDD, and Cloud).

MergerFS: Flexible, Media Friendly

MergerFS pools drives into /mnt/media regardless of size.

Why it works for media:

- Add drives without rebuilding (I started with 3 drives, now at 8)

- Any size, any speed, different brands, no “matched set” needed

- If a drive dies, you lose only what was on that drive, not the whole pool

- Reads are fast, writes go to whichever drive has space (configurable policies)

- Works on bare metal Debian with zero virtualization overhead

The catch: No real-time parity. If a drive dies, that data is gone unless you have backups or add parity separately (see SnapRAID below).

| Feature | ZFS | MergerFS |

|---|---|---|

| Real-time parity | ✅ Yes | ❌ Not built-in |

| Flexible drive sizes | ❌ No (same-size vdevs) | ✅ Yes |

| File-level recovery | ❌ Generally no | ✅ Straightforward |

| Hardware overhead | High (RAM, ECC preferred) | Low (runs on anything) |

| VM-friendly | ⚠️ Possible but problematic | ✅ But run it on bare metal anyway |

| Ideal for media | Overkill | Perfect fit |

Verdict:

- If your NAS is mostly media you can re-download - go MergerFS

- If your NAS hosts business-critical or irreplaceable data - go ZFS

MergerFS + SnapRAID: The Best of Both Worlds

MergerFS gives me flexible pooling. But what about redundancy?

Enter SnapRAID: parity for files that don’t change often (perfect for media).

How it works:

- I dedicate 1 drive as a parity drive (the largest drive)

- SnapRAID calculates parity across the pool on-demand (I’ll run it nightly via cron)

- If a drive dies, I can rebuild from parity

- Unlike RAID, parity is calculated when YOU tell it to, not in real-time

- If TWO drives die before I sync parity… yeah, I lose some files. But that’s the trade-off for flexibility

I’m adding this to my setup next month because I finally have data I can’t easily redownload (family videos, photos). For movies and TV? I didn’t bother for two years. The cost/benefit wasn’t there.

Why this works on bare metal: SnapRAID needs direct drive access for parity calculation. Running it in a VM means the hypervisor is between your file system and the drives - adding latency, complexity, and potential corruption. On bare metal? It just works.

Hardware Recommendations

It’s gear time. Because yes, you can buy this now. And yes, you can target budget or beast mode depending on how deep your wallet is.

| Tier | Specs | Use Case | What I’d Buy Today |

|---|---|---|---|

| Budget | 2-4 bays, low-power CPU, basic RAM | Cold storage, archives | Old desktop with 2-4 SATA ports. Intel Pentium or i3, 8GB RAM. Purpose: hold files, serve NFS. Cost: $50-100 used. |

| Balanced | 4-8 bays, decent CPU, 16GB RAM | Streaming + moderate load | This is basically my setup. Rosewill Helium NAS case ($90), Intel G3220 or newer i3 ($50 used), 16GB RAM, LSI HBA in IT mode ($50 used), 8x drives. Quiet, expandable, fits under a desk. Total: ~$300 + drives. |

| Beast | 8+ bays, modern i5/i7, 10GbE NICs | Multi-user, 4K/8K, heavy lift | Rosewill Helium NAS or bigger case, i5-12400 or better, 32GB RAM, 10GbE NIC, quality PSU. Overkill for most, perfect if you’re streaming to 5+ users simultaneously. Cost: $600-800 + drives. |

| Simple | Synology 4-8 bay NAS | Just want it to work, don’t want to DIY | DS920+, DS1522+, or whatever’s current. You pay more, but it works out of the box. No shame in this. Cost: $400-800 + drives. |

The Rosewill Helium NAS ATX mid-tower is a budget-friendly case built with storage in mind. It fits a standard ATX motherboard, has space for 10 3.5" hard drives as well as room for HBAs or SATA expanders. For a DIY NAS this case lots of room for growth without paying server-chassis prices.

Critical hardware note: Whatever you buy, it needs:

- Direct SATA connections (no USB)

- Enough RAM for your OS + file system cache (8GB minimum, 16GB better)

- Quality power supply (drives are expensive, don’t cheap out on PSU)

- Network connectivity that matches your needs (2.5GbE or 10GbE if you have heavy traffic)

Network: The Bottleneck Nobody Talks About

1GbE = 125 MB/s theoretical, ~110 MB/s real-world.

That’s fine for one 4K stream (40 Mbps) or even 20 simultaneous 1080p streams. Sounds like plenty, right?

Except you’re also:

- Downloading a new TV Series

- Running backups to your PBS box (Part 5 of this series)

- Scanning new media into Jellyfin

- Maybe transcoding if someone’s on a slow client

Now you’re maxing out that 1GbE link. Streams buffer. Your spouse asks if the internet is broken. You check your bandwidth graphs and realize: it’s not the ISP, it’s your internal network.

What I Did

Added a $30 Intel X520-DA1 10GbE NIC to my NAS box (used on eBay) and a $60 SODOLA 8-Port 2.5Gb managed switch.

Provides eight 2.5GbE ports, a quiet fanless metal chassis, and a simple web UI with essentials like 802.1Q VLANs, QoS, and link aggregation. It unlocks multi-gig LAN speeds for NAS and desktops while keeping segmentation clean and power draw low.

Result:

- NAS has 10GbE NIC (downlinks at 2.5Gb to the switch)

- Proxmox has 2.5GbE NIC

- Sustained throughput: ~280 MB/s (vs ~110 MB/s on 1GbE)

- Multiple 4K streams + torrents + backups = no congestion

Streams never buffer. Backups run 2.5x faster. Torrents don’t fight with Jellyfin for bandwidth.

If you’re building from scratch: Just buy a motherboard with 2.5GbE built in. The Intel i226-V chipset is solid and adds $0 to motherboard cost these days. Many boards in the $100-150 range include it standard.

When to consider 10GbE:

- 5+ simultaneous users streaming 4K

- Heavy backup workloads (multiple TB per day)

- You transcode on a separate box and move large files constantly

- You’re a data hoarder moving TBs between systems regularly

For most home setups? 2.5GbE is the sweet spot. Cheap, no special cables needed (works on Cat5e), massive improvement over 1GbE.

Intel X520-DA1 10GbE NIC

This 10Gtek network card is designed for use with Intel X520-DA1 routers and features a maximum data rate of 10 Gbps. With one SFP+ port and PCIe x8 interface, this card provides high-speed connectivity for your network.

Price: $30 - $40

Contains affiliate links. I may earn a commission at no cost to you.

My network setup:

- NAS: Intel X520-DA1 10GbE NIC with DAC cable to switch

- Switch: SODOLA 8-Port 2.5Gb managed switch

- Proxmox: Onboard 2.5GbE

- Clients: Mix of 1GbE and 2.5GbE

Total cost: ~$90 for the upgrade. Best $90 I’ve spent on this build.

Storage Layouts That Make Sense

Turning hardware into a predictable, reliable stack.

Directory Structure

Use clear, consistent paths:

/mnt/media/

├── movies/

├── shows/

├── music/

└── books/

Not /media1/stuff, /driveB/movies, /bob-likes-anime/. Keep it simple. Keep it consistent.

Why this matters: when you’re debugging at 3 AM (you won’t be, because bare metal doesn’t have race conditions, but hypothetically), you want obvious paths. When you’re setting up a new container, you want to know exactly where /mnt/media/movies lives.

Separate Temp and Archive

This is critical and most people get it wrong.

- Transcode, temp, and download directories: Put these on SSDs or a separate spindle pool that you don’t care about. You do NOT want qBittorrent hammering your archive drives with random writes while incomplete downloads get moved around.

- Finished media: Goes to the archive pool (the big slow drives via MergerFS). This is read-mostly workload. Movies get added once, read many times, rarely deleted.

My setup:

NAS Box:

├── 500GB SSD: Debian 13, NFS, SMB, MergerFS and Snapraid (that's it don't over complicate it).

└── 93TB MergerFS pool (Mix of 3, 6, 14, and 24TB Drives): Static Storage only.

Any Docker containers, downloads, or Jellyfin transcodes are on the compute node with fast redundant data storage.

SABnzbd downloads to compute node’s fast storage. When a download completes, Sonarr/Radarr move it to /mnt/media/tv or /mnt/media/movies on the MergerFS pool. The compute node’s storage absorbs all the random write punishment. The spinning drives just handle sequential writes when media is added and sequential reads when streaming.

Mount Strategy

- Mount read-only where possible for older content (reduces risk of accidental deletion). My Jellyfin server can only read the media files on the NAS.

- Set up rsync or backups to your backup box (Part 5 of this series) so you’re not relying on storage redundancy for your important files.

- Run SMART monitoring:

smartctl -a /dev/sdXmonthly, check for reallocated sectors, pending sectors, or UDMA CRC errors - Schedule pool scrubs if using SnapRAID (I’ll run

snapraid syncnightly,snapraid scrubweekly once I set it up) - Test restores: Seriously. Shut down, pull a drive, boot, verify you know how to identify which drive failed and how MergerFS handles it. Learn how recovery works BEFORE you need it at 3 AM.

When your storage setup is done well - you forget it’s there. When it fails - you will be ready.

My Actual MergerFS Config

For reference, here’s what I run on bare metal Debian:

Drive mounts in /etc/fstab:

/dev/disk/by-id/wwn-0x5000c500b531bbcc-part1 /mnt/Pool/Disk1 xfs defaults 0 0

/dev/disk/by-id/wwn-0x5000c500e4505355-part1 /mnt/Pool/Disk2 xfs defaults 0 0

/dev/disk/by-id/wwn-0x5000c500e50b9986-part1 /mnt/Pool/Disk3 xfs defaults 0 0

/dev/disk/by-id/wwn-0x5000c500e82476d9-part1 /mnt/Pool/Disk4 xfs defaults 0 0

/dev/disk/by-id/wwn-0x5000cca295caac7a-part1 /mnt/Pool/Disk5 xfs defaults 0 0

/dev/disk/by-id/wwn-0x5000cca2a1dcb6af-part1 /mnt/Pool/Disk6 xfs defaults 0 0

/dev/disk/by-id/wwn-0x50014ee20d104997-part1 /mnt/Pool/Disk7 xfs defaults 0 0

MergerFS pool:

/mnt/Pool/Disk* /media/Storage fuse.mergerfs direct_io,defaults,allow_other,dropcacheonclose=true,inodecalc=path-hash,category.create=mfs,minfreespace=50G,fsname=storage 0 0

NFS exports in /etc/exports:

/media/Storage/ 172.27.0.0/24(all_squash,anongid=1001,anonuid=1000,insecure,rw,subtree_check,fsid=0)

That’s it. No GUI. No abstraction layers. Just Linux doing what Linux does best: serving files reliably.

Common Mistakes (That I Made So You Don’t Have To)

- Running storage as a VM on your compute host:

- Race conditions, mount order chaos, reboots that break everything. This was my life for six months. Don’t do it.

- Running storage in an LXC:

- Even worse than a VM. Filesystem passthrough nightmares, permission issues, SMART monitoring doesn’t work right. Just don’t.

- Using USB externals without redundancy:

- One disconnect = data loss. If the data matters, it needs to be on real SATA connected to a real motherboard.

- Mixing different drive sizes in a ZFS pool:

- Kills performance, wastes capacity. ZFS wants matched vdevs. (MergerFS doesn’t care. One of many reasons I prefer it for media.)

- Ignoring ECC RAM when building ZFS:

- Silent bit flips can corrupt your “perfect” checksummed pool. If you go ZFS, get ECC. Or just use MergerFS and skip the RAM requirements.

- Never testing restores:

- You don’t have a backup until you’ve tested the restore. Same goes for RAID/parity rebuilds. Pull a drive while the system is OFF (don’t hot-swap unless you know what you’re doing) and verify you can identify and recover from the failure.

- Trusting your setup without monitoring:

- Run SMART checks monthly. Watch your logs. Drives give warnings before they die—if you’re paying attention.

- Thinking “I’ll add redundancy later”:

- Later never comes. If the data matters NOW, protect it NOW. I’m guilty of this (took me 2 years to decide on SnapRAID), but at least I knew what I was risking.

The Only Time Storage In A VM Makes Sense

Let me be fair: is there ANY scenario where running storage in a VM is acceptable?

Maybe - MAYBE - if:

- You’re running TrueNAS as a VM with direct PCI passthrough of an HBA

- It’s THE ONLY VM on a dedicated Proxmox host (no competing VMs/LXCs)

- You’ve pinned the VM to specific CPU cores, so it always has resources

- You’ve configured Proxmox to boot the storage VM first with significant delays before anything else starts

- You’ve tested cold boot scenarios extensively

But even then: what are you gaining? You’ve added a hypervisor layer between your storage and your compute. You’ve introduced complexity, potential for race conditions, and dependency on Proxmox functioning correctly.

The question isn’t “can you make it work?” The question is “why bother?”

My answer: There is no good reason. The “one box does everything” dream is just that a dream. You’re trading $50-100 of old hardware for hours of maintenance and fragility you don’t need.

If you want to run TrueNAS or OpenMediaVault, run them on bare metal. If you want Debian + MergerFS, run it on bare metal. Give storage its own box and let it do its job without interference.

What You Should Do Right Now

If you’re currently running storage in a VM or LXC:

Option 1: Build A New NAS Box (Recommended)

- Get cheap hardware: Old desktop, $50-100 on Craigslist/eBay, needs 4+ SATA ports

- Install Debian (or your preferred NAS OS—on bare metal)

- Set up NFS/SMB shares while your VM is still running

- Copy data from VM to new box (rsync, verify with diff/checksums)

- Test thoroughly (mount from Proxmox, verify Jellyfin sees everything)

- Cut over: Update Proxmox mounts to point to new NAS IP

- Shut down the VM forever

Downtime: ~2 hours for final cutover. Peace of mind: priceless.

Option 2: Repurpose Your Proxmox Host

If you only have one box:

- Back up your containers/VMs (you should be doing this anyway)

- Wipe Proxmox

- Install Debian as NAS on the bare metal

- Buy a second box for Proxmox (can be cheaper since it’s just compute)

- Rebuild your compute stack on the new Proxmox host

- Mount from the NAS

Yes, this requires buying hardware. But you’re separating concerns the right way. Storage on one box, compute on another. This is how it should be.

Option 3: Stay In VM Hell

Continue debugging mount failures, systemd dependencies, and race conditions. Spend 3 hours every few weeks troubleshooting why reboots break everything. Wonder why your spouse hates your “hobby.”

I don’t recommend this option.

Final Thoughts

I run 93TB of media on bare metal Debian with MergerFS. Eight drives of different sizes, XFS file systems, pooled with MergerFS. No parity yet (adding SnapRAID next month). 2.5GbE network via 10GbE NIC to SODOLA switch. NFS shares to Proxmox. SMB shares to Windows clients.

Hardware:

- Intel G3220 (old, low-power, sufficient)

- Gigabyte GA-Z87X-D3H motherboard

- 16GB DDR3 RAM

- LSI HBA in IT mode (direct drive access, no RAID controller nonsense)

- Intel X520-DA1 10GbE NIC

- Rosewill Helium NAS case (holds 10 drives, quiet, fits under a desk)

The box boots in 90 seconds. Serves files to two Proxmox hosts and a dozen containers. I haven’t touched it in months except to check SMART data.

Total cost: ~$2,000 over three years (includes drives, case, and network gear).

I haven’t had a mount failure in six months. I reboot my compute nodes whenever I want. Proxmox updates don’t scare me. Power outages resolve themselves. My storage just… works.

If your media library matters, and if you’re reading this, it does - give it its own box. Separate storage from compute. Pick an OS (I chose Debian + MergerFS, you might prefer OpenMediaVault or Unraid). Learn it. Build it right. Run it on bare metal.

You’ll thank yourself at 3 AM when the power comes back and everything just comes back online in the right order. Or better yet - you’ll sleep through it because there’s nothing to debug.

Stop running storage in VMs. Stop fighting race conditions. Stop spending weekends debugging mount failures.

Build it right. Run it on bare metal. Forget it exists.